AI and APIs Are a Perfect Match

Two markets are accelerating in parallel, and their intersection is becoming one of the most important layers in modern software.

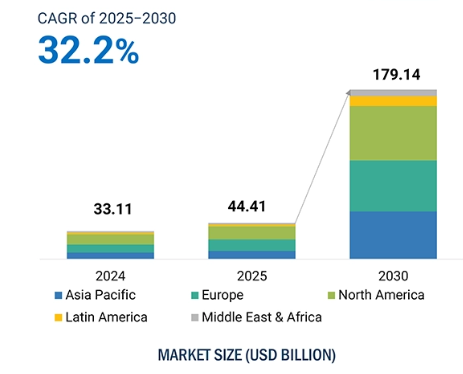

On the API side, the API management market alone is projected to grow from ~$8.86B (2025) to ~$19.28B (2030) (≈ 16.83% CAGR). (Mordor Intelligence) On the AI side, major analyst and enterprise forecasts increasingly point to trillion-dollar scale outcomes by 2030, with projections spanning everything from AI infrastructure spend to broader economic impact. (Reuters) Even if you ignore the widest “AI will change everything” estimates and focus strictly on AI delivered as an interface, the AI API market (AI capabilities exposed via APIs) is projected to grow from $44.41B (2025) to $179.14B (2030) (≈ 32.2% CAGR). (MarketsandMarkets)
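
The growth rates quoted above can be sanity-checked from the endpoint figures. The sketch below applies the standard compound annual growth rate formula over the five years from 2025 to 2030; the function name `cagr` is chosen here for illustration, and small differences from the published rates are expected because the dollar figures are rounded.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.17 means 17%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in billions of dollars, 2025 -> 2030, as quoted in the text.
api_management = cagr(8.86, 19.28, 5)
ai_api = cagr(44.41, 179.14, 5)

print(f"API management: {api_management:.1%}")
print(f"AI API:         {ai_api:.1%}")
```

Both results land within a fraction of a percentage point of the quoted 16.83% and 32.2%.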

The conclusion is straightforward: AI is increasingly consumed as an API, and APIs are increasingly shaped by AI.

APIs are the delivery system for intelligence

Most AI value only becomes real when it’s embedded into products: search, support, fraud, personalization, content generation, workflow automation. In practice, that embedding almost always happens through an API boundary, whether internal (service-to-service) or external (developer-facing).

That’s why “AI adoption” at the application layer often looks like this:

- A product calls an endpoint.

- The endpoint returns a prediction, generation, classification, ranking, or action.

- The product turns that output into user-visible value.

The API is the “last mile” of AI.

And the market is reflecting that: analysts are explicitly measuring an “AI API market” as a distinct category, with rapid growth driven by enterprises integrating capabilities like computer vision, speech, text analytics, document parsing, translation, and generative models into existing systems. (MarketsandMarkets)

Why this pairing is inevitable

1) AI is probabilistic; APIs are deterministic

APIs are contracts. Even when the backend is complex, the interface must be reliable: inputs, outputs, latency, error modes, rate limits, quotas. AI, by contrast, is probabilistic and often non-deterministic.

The API boundary is where you turn probabilistic intelligence into something engineers can safely build on:

- Explicit schemas

- Versioning

- SLAs

- Fallback behavior

- Observability and tracing

- Safety filters and validation

In other words: APIs make AI operational.
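
As a minimal sketch of that boundary, assume a document classifier whose raw output cannot be trusted to match the schema. Everything named here (`flaky_model`, `ClassifyResponse`, the label set, the version string) is hypothetical and only illustrates the pattern: validate the model output against an explicit schema, and return a well-formed fallback when validation fails.

```python
import random
from dataclasses import dataclass

ALLOWED_LABELS = {"invoice", "receipt", "contract"}  # hypothetical schema


@dataclass(frozen=True)
class ClassifyResponse:
    label: str
    confidence: float
    model_version: str
    used_fallback: bool


def flaky_model(text: str) -> dict:
    """Hypothetical stand-in for a non-deterministic model call."""
    return random.choice([
        {"label": "invoice", "confidence": 0.93},
        {"label": "banana", "confidence": 0.40},  # label outside the schema
        {"label": "receipt"},                     # missing confidence field
    ])


def classify(text: str, model=flaky_model, version: str = "2025-01-01") -> ClassifyResponse:
    """Always return a schema-valid response, whatever the model produced."""
    raw = model(text)
    label = raw.get("label")
    confidence = raw.get("confidence")
    valid = (
        label in ALLOWED_LABELS
        and isinstance(confidence, (int, float))
        and 0.0 <= confidence <= 1.0
    )
    if not valid:
        # Fallback behavior: a fixed, documented answer instead of a malformed one.
        return ClassifyResponse("unknown", 0.0, version, used_fallback=True)
    return ClassifyResponse(label, float(confidence), version, used_fallback=False)
```

Callers only ever see `ClassifyResponse`, so they can code against the contract and treat `used_fallback` as a signal rather than handling malformed output themselves.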

2) AI doesn’t replace software; it increases composability

As systems become more agentic, they become more modular, not less. Agents orchestrate tools. Tools are accessed through interfaces. Interfaces are APIs.

This is one of the quiet implications behind the “API-first” trend: organizations increasingly treat APIs as durable products and foundations for modern architectures, including AI-centric ones. For example, Postman’s State of the API report highlights broad adoption of API-first approaches across organizations. (postman.com)

3) The unit of work for AI is increasingly “a call”

AI workloads map naturally to request/response patterns:

- “Classify this document”

- “Extract entities from this text”

- “Generate a summary”

- “Rank these results”

- “Decide the next action”

Even when the underlying implementation is complex (tool use, multi-step reasoning, retrieval), the consumption model tends to collapse into “call an interface, get a result.”

That’s exactly why the AI API market is a useful lens: it captures how enterprises actually consume AI in production. (MarketsandMarkets)

What’s changing in API design because of AI

1) The interface is shifting from “data access” to “capability access”

Classic APIs expose data and CRUD operations. AI-flavored APIs increasingly expose capabilities:

- Understanding

- Transformation

- Decisioning

- Generation

This changes how developers evaluate endpoints. It’s less about “does it return the right fields?” and more about:

- Does it generalize?

- Does it handle edge cases?

- Does it degrade gracefully?

- Is output quality stable across time?

2) Latency becomes a product feature, not a metric

For many AI calls, latency determines whether the capability can be used in-line (live) or must be moved off-path (batch). That drives architectural decisions:

- streaming vs non-streaming

- async job APIs

- event-driven callbacks

- caching strategies

- partial results

3) Versioning becomes more subtle

With AI, you can change behavior without changing schemas. That’s both powerful and dangerous. Mature AI APIs increasingly need:

- model/version pins

- reproducibility modes

- evaluation windows

- change logs with behavioral diffs

- canary release paths
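
Two of these needs, version pins and canary release paths, fit in a few lines. The sketch assumes a hypothetical endpoint with two dated model versions; the version strings, the 5% canary share, and the function names are illustrative. Callers that pin a version always get it, and unpinned callers are split deterministically by hashing their id.

```python
import hashlib

# Hypothetical release data; a real service would load this from config.
STABLE_VERSION = "2024-06-01"
CANARY_VERSION = "2025-01-15"
KNOWN_VERSIONS = {STABLE_VERSION, CANARY_VERSION}
CANARY_FRACTION = 0.05  # share of unpinned callers routed to the canary


def in_canary(caller_id: str, fraction: float) -> bool:
    """Map a caller to a stable bucket in [0, 1) and compare to the fraction."""
    digest = hashlib.sha256(caller_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < fraction


def resolve_version(caller_id, pinned=None, canary_fraction=CANARY_FRACTION):
    """Return the model version that should serve this caller."""
    if pinned is not None:
        if pinned not in KNOWN_VERSIONS:
            raise ValueError(f"unknown model version: {pinned}")
        return pinned  # an explicit pin always wins over canary routing
    if in_canary(caller_id, canary_fraction):
        return CANARY_VERSION
    return STABLE_VERSION
```

Because the bucket depends only on the caller id, a given caller sees consistent behavior from one request to the next, and echoing the resolved version in every response makes behavioral changes traceable.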

What’s changing in AI because of APIs

1) Distribution beats raw model quality

A slightly worse model with an excellent API often wins over a better model with poor integration, because in production:

- uptime matters

- error modes matter

- billing and quotas matter

- documentation matters

- SDKs matter

- developer experience matters

APIs are a go-to-market channel for AI.

2) Tooling and observability become part of the “intelligence”

The real product is not “a model,” it’s a system:

- monitoring drift

- tracing calls

- collecting feedback

- measuring outcomes

- red-teaming and abuse detection

This is why AI consumed through APIs pushes teams toward platform thinking: you’re not shipping a static artifact, you’re operating a living service.
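
A small part of that operating work, tracing calls, might look like the sketch below. It assumes an in-memory `CALL_LOG` list in place of a real tracing backend, and the decorator name `traced` and the record fields are illustrative.

```python
import functools
import time

CALL_LOG = []  # stand-in for a real tracing or metrics backend


def traced(model_version: str):
    """Record latency, outcome, and model version for every call."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "ok"
            try:
                return fn(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                # Runs on both success and failure, so no call goes unrecorded.
                CALL_LOG.append({
                    "endpoint": fn.__name__,
                    "model_version": model_version,
                    "status": status,
                    "latency_s": time.perf_counter() - start,
                })
        return wrapper
    return decorator


@traced(model_version="2025-01-15")
def summarize(text: str) -> str:
    """Hypothetical endpoint; a real one would call a model here."""
    if not text:
        raise ValueError("empty input")
    return text[:20]
```

Tagging each record with the model version is what later makes it possible to compare latency and error rates across releases.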

The economics: why APIs are becoming the AI business model

AI is expensive to run (compute, infra, reliability). The market is converging on usage-based economics because it maps naturally to the unit of consumption: requests.

That’s also why the “AI as APIs” framing matters: it’s not just technical, it’s economic. A large share of AI value will be captured at the interface layer where:

- usage is measured

- billing is enforced

- trust is accumulated

- reliability is proven
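
The first two items, measuring usage and enforcing billing, reduce to simple bookkeeping at the interface. The sketch below assumes token-based pricing with a made-up rate and a per-key quota; `UsageMeter`, `QuotaExceeded`, and the price constant are hypothetical names, not any provider's actual scheme.

```python
from collections import defaultdict

PRICE_PER_1K_TOKENS_USD = 0.002  # hypothetical price, for illustration only


class QuotaExceeded(Exception):
    """Raised when a call would push an API key past its quota."""


class UsageMeter:
    def __init__(self, quota_tokens: int) -> None:
        self.quota_tokens = quota_tokens
        self.used_tokens = defaultdict(int)

    def record(self, api_key: str, tokens: int) -> None:
        """Count usage for one request, rejecting it if the quota would be exceeded."""
        if self.used_tokens[api_key] + tokens > self.quota_tokens:
            raise QuotaExceeded(api_key)
        self.used_tokens[api_key] += tokens

    def amount_due(self, api_key: str) -> float:
        """Usage-based charge in dollars for everything recorded so far."""
        return self.used_tokens[api_key] / 1000 * PRICE_PER_1K_TOKENS_USD


meter = UsageMeter(quota_tokens=10_000)
meter.record("team-a", 4_000)
meter.record("team-a", 5_000)
print(f"team-a owes ${meter.amount_due('team-a'):.4f}")
```

With 9,000 tokens recorded at the assumed rate, the charge comes to about $0.018, and a further 2,000-token request would be rejected by the quota check.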

The growth projections for AI APIs (as a market category) reflect this reality: enterprises are buying AI as callable capabilities rather than as standalone research artifacts. (MarketsandMarkets)

A reality check: APIs already run at extreme scale

To understand why “AI + APIs” is the natural pairing, look at what modern API infrastructure already supports. Payment platforms, comms platforms, and identity platforms all operate on API traffic that is massive and continuous.

Even if specific numbers vary by source, the direction is clear: the internet is already an API-native environment, and AI is being poured into that environment through interfaces. (postman.com)

Where this goes next

When APIs become the primary delivery mechanism for intelligence, a few second-order effects follow:

- Every team becomes an integration team. The competitive edge becomes how quickly you can compose capabilities.

- “Model selection” becomes “endpoint selection.” Developers will increasingly choose intelligence the way they choose infrastructure: by reliability, latency, pricing, and ergonomics.

- Software becomes more dynamic. Instead of shipping fixed logic, products will lean on callable capabilities that evolve, raising the importance of evaluation, version control, and trust.

- The interface layer becomes strategic. The companies and ecosystems that make AI consumption frictionless through clean APIs, strong guarantees, and transparent economics will shape how intelligence spreads.

That’s why AI and APIs are not just compatible. They’re converging into the same layer: the unit of intelligence in production is the API call.